February 20, 2025

Germany goes to the polls on Sunday for an election that could prove transformative. The key player is the far-right Alternative for Germany (AfD) party, which won 10.4% of the vote in the last federal election. Current polling puts AfD at roughly twice that level, a result that would likely rank them second behind the center-right CDU/CSU and closer to real power than the party has ever been at the federal level.

Why does that matter? Well, you may remember Germany has something of a history with surging far-right parties. AfD leaders and members keep being discovered doing some pretty Nazi things: denying the Holocaust, plotting to overthrow the state, reclaiming Nazi slogans, planning mass deportations of German citizens of the wrong ethnicity, sharing Anne Frank “fresh from the oven” memes in private Facebook groups, and generally calling for a return to Völkisch nationalism. Even Marine Le Pen’s far-right National Rally party in France announced it wanted nothing to do with the AfD.

And indeed, Germany’s major parties have historically maintained a cordon sanitaire between the far right and the political mainstream, refusing to collaborate with the AfD. That firewall held from the end of World War II until…last month, when the conservative CDU teamed with the AfD to try to reduce immigration, leading to massive protests. Add in the ongoing chaos in Washington, along with the shocking AfD endorsements from Elon Musk and J.D. Vance, and the world will be tuned in to Sunday’s results.

Which is why a new study out today, by the international climate NGO Global Witness, is worth some attention. It looks at how the recommendation algorithms of Twitter, TikTok, and Instagram are affecting the political content that German users are seeing pre-election. The results were stark:

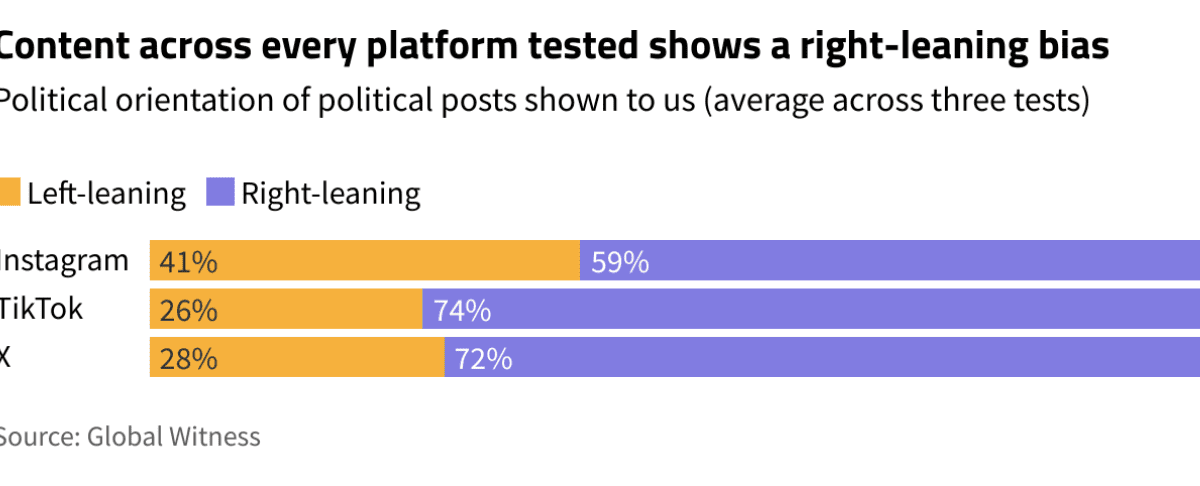

> Non-partisan social media users in Germany are seeing right-leaning content more than twice as much than left-leaning content in the lead up to the country’s federal elections, a new Global Witness investigation reveals.
>
> At a time when Big Tech companies are increasingly under scrutiny for their influence on major elections, investigators studied what content TikTok, X and Instagram’s algorithms were recommending to politically interested yet non-partisan users during the election build up.

Here’s what they did. When normal humans look at their social feeds, what they see is heavily influenced by what the platform knows about them: who they’ve followed, what they’ve liked, how they’ve spent their time scrolling. If someone only follows Milwaukee Brewers players and only likes Milwaukee Brewers posts, no one would be surprised if a social algorithm starts feeding them Milwaukee Brewers content. That makes it difficult to determine how much of what you’re seeing is the result of your past behavior and how much is something baked into the algorithm itself.

To get around that, Global Witness created brand new accounts on factory-reset devices on all three platforms, giving each identity no past behavior for an algorithm to draw conclusions from. On each platform, they then followed only eight other users: the official accounts of Germany’s four major parties and the accounts of each party’s leader. For each of those accounts, they read or watched its five most recent posts. That should have told the platform the accounts were interested in political content without expressing any partisan position.

They then went to each platform’s For You feed and started recording what they were being shown — classifying each post by whether it leaned right or left, whether it supported a particular party, and whether or not it was posted by one of those eight accounts they’d followed.

Nearly three-quarters of the political content they were shown on TikTok (74%) and Twitter (72%) was right-leaning, and the largest share of that content was pro-AfD. Instagram’s content also leaned to the right, but to a lesser degree (59%).

Remarkably, most of that rightward lean was driven by content from accounts the researchers weren’t following (that is, not those eight party and leader accounts). How much of each feed came from non-followed accounts varied wildly by platform. On Instagram, for instance, 96% of the content served up came from one of those eight accounts. But on TikTok, that number was only 8%. And the non-followed accounts the algorithms chose leaned strongly to the right.

> You have control over the accounts you choose to follow. While there are ways to indicate to a platform what else you want to see, ultimately, what posts platforms feed to you are largely beyond your control. And in our test, the party political content chosen by the recommendation systems on TikTok and X was politically biased.

On TikTok, 78% of the partisan content shown was pro-AfD. (The other three parties: 8%, 8%, and 6%.) On Twitter, 64% was pro-AfD. (The other three: 18%, 14%, and 6%.)
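For readers who want to see how numbers like these fall out of a labeled feed, here is a minimal sketch of the tallying step. It is not Global Witness’s actual code or coding scheme. The `Post` fields, the `summarize` function, the choice of denominators, and the toy sample are all assumptions made for illustration, and the sample is invented data, not the study’s.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Post:
    """One recorded feed item (hypothetical schema, not the study's)."""
    lean: Optional[str]    # "right", "left", or None if no clear lean
    party: Optional[str]   # party the post supports, or None
    from_followed: bool    # True if posted by one of the followed accounts


def share(count: int, total: int) -> float:
    """Return count as a percentage of total, or 0.0 if total is zero."""
    return 100.0 * count / total if total else 0.0


def summarize(posts: list[Post]) -> dict:
    # Assumption: lean is measured only over posts labeled left or right.
    leaning = [p for p in posts if p.lean in ("right", "left")]
    right = sum(p.lean == "right" for p in leaning)

    # Assumption: the followed-account share is measured over every post.
    followed = sum(p.from_followed for p in posts)

    # Party shares are measured over posts that support some party.
    partisan = [p for p in posts if p.party is not None]
    by_party = Counter(p.party for p in partisan)

    return {
        "right_share": share(right, len(leaning)),
        "followed_share": share(followed, len(posts)),
        "party_shares": {
            name: share(n, len(partisan)) for name, n in by_party.items()
        },
    }


# Invented toy feed with placeholder party names, for illustration only.
sample = [
    Post("right", "Party A", False),
    Post("right", "Party A", False),
    Post("right", None, True),
    Post("left", "Party B", True),
    Post(None, None, False),
]

result = summarize(sample)
print(result)
```

The choice of denominator matters: a platform can look more or less skewed depending on whether unlabeled posts are counted, which is one reason the percentages in a study like this should be read alongside its stated method.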

Anyone who’s spent time on Elon Musk’s Twitter probably won’t be surprised to see this big a thumb on the scale. Social media platforms have long been criticized for feeding people content they’re predisposed to agree with, encasing them in a filter bubble. But at least that’s their filter bubble. These are filters being imposed from the outside.

This is, in theory, a fixable problem. You may remember, a few years back, seeing studies similar to this one, but about YouTube — specifically, about how its recommendation system was driving people toward ever more radicalized content. In 2019, YouTube announced it was changing its algorithm to reduce this push to more extreme material — “reducing recommendations of borderline content and content that could misinform users in harmful ways.” Within a few months, it reported that these changes had led to “a 70% average drop in watch time of this content coming from non-subscribed recommendations in the U.S.” Those videos were still available for people who wanted to find them, but YouTube sharply decreased how often it would actively push them to users. Follow-up studies have generally (though not universally) found that the radicalizing effects of the YouTube rabbit hole are now strongly diminished or gone altogether.

But YouTube had to want to make that change. (Or, more cynically, it needed to feel that a drumbeat of “YouTube videos made my kid join ISIS” stories was very bad PR.) An algorithm heavily weighted to far-right content might seem like a bug to most, but to Elon Musk, it is most certainly a feature. Will a TikTok that is trying to get in Donald Trump’s good graces see this sort of a skew as a problem or a selling point?

In French, a cordon sanitaire translates literally as a quarantine barrier: a structure erected to help prevent a sickness from spreading. Throughout the Covid-19 pandemic, societies worldwide debated endlessly how strict restrictions should be. Reasonable people can disagree on whether or how vigorously a party as extreme as the AfD should be kept out of mainstream politics. But what we’re seeing on social media platforms today isn’t that debate. Instead, the platforms are actively pro-virus, treating extremism as something to be celebrated and promoted rather than shunned. On Sunday, we’ll learn the extent of the spread.

Source: Joshua Benton and the team at Nieman Lab.