
March 10, 2025


Over the past year, AI chatbots have been widely criticized for how poorly they cite news publishers, and how little traffic they drive to the publishers they do cite properly.

ChatGPT has often been at the center of this conversation. Last summer, I reported that ChatGPT frequently hallucinated fake URLs to news sites, even to articles from OpenAI’s own publishing partners. Research has continued to show that these citation issues are not limited to ChatGPT, but are in fact chronic across the AI industry.

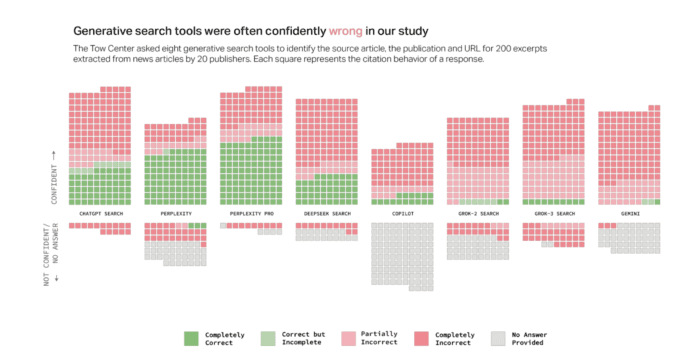

On March 6, Klaudia Jaźwińska and Aisvarya Chandrasekar, researchers at the Tow Center for Digital Journalism at Columbia University, added new hard numbers to this conversation, and they’re pretty damning. For their study “AI search has a citation problem,” they conducted 200 tests on each of eight AI search engines: ChatGPT Search, Perplexity, Perplexity Pro, Gemini, DeepSeek Search, Grok-2 Search, Grok-3 Search, and Copilot.

Each test query gave the search engine a quote from an article, then prompted the chatbot to respond with the article’s title, date of publication, publication name, and URL. Across all 1,600 test queries, the search engines collectively failed to retrieve the correct information more than 60% of the time.
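To make that test design concrete, here is a minimal, hypothetical sketch of how answers to such citation queries could be scored. The `Citation` fields mirror the four items the chatbots were asked to return; the rule that an answer counts as correct only if every field matches exactly, and that a declined answer counts as a failure, is an assumption for illustration, not the Tow Center’s actual grading rubric.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Citation:
    """The four fields each test query asked the chatbot to return."""
    title: str
    date: str          # publication date, e.g. "2025-03-06"
    publication: str
    url: str


def is_correct(expected: Citation, answer: Optional[Citation]) -> bool:
    """Assumed rule: correct only if every field matches exactly.

    A declined or missing answer (None) is treated as a failure.
    """
    return answer is not None and answer == expected


def failure_rate(results: Sequence[Tuple[Citation, Optional[Citation]]]) -> float:
    """Share of (expected, answer) pairs that were not answered correctly."""
    if not results:
        raise ValueError("no results to score")
    failures = sum(1 for expected, answer in results
                   if not is_correct(expected, answer))
    return failures / len(results)
```

Running `failure_rate` separately over each engine’s 200 results, and once over all 1,600 pooled results, would yield per-engine and overall figures of the kind reported in the study.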

Perplexity, which brands itself as a tool for research, had the lowest failure rate, answering incorrectly 37% of the time. Grok-3 Search had the highest, at 94%. The chatbot is available to X Premium+ subscribers, a subscription that until recently cost $40 per month, yet it scored even worse in their tests than Grok-2, the free version of the same tool.

Another problem outlined by Jaźwińska and Chandrasekar is how confidently these search engines are wrong. While some chatbots are built to acknowledge when they don’t know an answer, many of the most popular AI search engines on the market lean towards blind confidence in their response language. This can make it even more difficult for users to determine when they should be skeptical of a response’s accuracy.

Of the 134 incorrect citations ChatGPT gave in their tests, for example, the chatbot used hedging language in only 15. Copilot was the one exception to this pattern, since it declined to answer a majority of the questions it was asked.
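The ChatGPT figure is a simple ratio: 15 hedged answers out of 134 incorrect ones is roughly 11%. Below is a minimal sketch of how one might flag hedging automatically. The phrase list is a hypothetical stand-in chosen for illustration; the researchers’ own method for identifying hedged responses is not described here.

```python
import re
from typing import Iterable

# Hypothetical hedging cues, not taken from the Tow Center study.
# "may" is deliberately left out because it would also match the month "May".
HEDGE_PATTERNS = [
    r"\bappears to\b",
    r"\bit seems\b",
    r"\bpossibly\b",
    r"\bmight\b",
    r"\bnot (?:sure|certain)\b",
    r"\bunable to (?:find|locate|confirm|verify)\b",
]
_HEDGE_RE = re.compile("|".join(HEDGE_PATTERNS), re.IGNORECASE)


def is_hedged(response: str) -> bool:
    """Return True if the response contains any of the hedging cues above."""
    return _HEDGE_RE.search(response) is not None


def hedged_share(responses: Iterable[str]) -> float:
    """Fraction of responses that contain at least one hedging cue."""
    items = list(responses)
    if not items:
        raise ValueError("no responses to check")
    return sum(is_hedged(r) for r in items) / len(items)


# The ratio reported for ChatGPT: 15 hedged out of 134 incorrect responses.
print(f"{15 / 134:.1%}")  # prints 11.2%
```

A keyword list like this is crude: it misses hedges phrased in other ways and can flag confident answers that happen to quote a cue word, which is one reason hand review remains the safer way to count them.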

Broken URLs were also a persistent problem in Jaźwińska and Chandrasekar’s tests, though the issue varied greatly from search engine to search engine. Gemini and Grok-3 were the worst offenders: they were the only two chatbots that provided more fabricated links than correct links across their 200 tests. Grok-3, for example, pointed users to 404 error pages 154 times.
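Checking whether a cited link actually resolves is one of the more mechanical parts of auditing AI citations. Here is a minimal sketch using only Python’s standard library. Sending a HEAD request and treating HTTP 404 as a broken link are simplifying assumptions (some sites reject HEAD requests, and others serve “soft 404” pages with a 200 status), and the `fetch_status` parameter is a hypothetical hook added so the tally can be tested without network access.

```python
import urllib.error
import urllib.request
from collections import Counter
from typing import Callable, Iterable


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for a HEAD request to `url`.

    urlopen follows redirects, so this is the status of the final page.
    Network failures other than HTTP errors (URLError) propagate to the caller.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # HTTPError is raised for 4xx/5xx responses; .code holds the status.
        return err.code


def tally_links(urls: Iterable[str],
                fetch_status: Callable[[str], int] = http_status) -> Counter:
    """Count cited URLs as 'working' (2xx), 'not_found' (404), or 'other'."""
    counts: Counter = Counter()
    for url in urls:
        status = fetch_status(url)
        if 200 <= status < 300:
            counts["working"] += 1
        elif status == 404:
            counts["not_found"] += 1
        else:
            counts["other"] += 1
    return counts
```

Comparing `counts["not_found"]` with `counts["working"]` for each engine gives a rough version of the comparison behind the finding that Gemini and Grok-3 returned more fabricated links than correct ones.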

The Tow Center report comes just as increased attention is being paid to the failure of AI search engines to actually drive traffic to news publishers. A recent study by TollBit, a startup that sells tools to help digital publishers monetize scraping by AI companies, found that “chatbots on average drive referral traffic at a rate that is 96% lower than traditional Google search.”

Last week, Generative AI in the Newsroom, a project from Northwestern University, published the first in a series of articles analyzing Comscore data on AI chatbots. In its first analysis of ChatGPT and Perplexity data, which covered five months in 2024, sites in the news publisher category received only 3.2% of ChatGPT’s filtered traffic and 7.4% of Perplexity’s filtered traffic.

AI search’s citation problem is one more reason to be skeptical of AI search as a burgeoning source of referral traffic. Unless AI companies can first ensure that their search engines consistently produce accurate citations of news publishers’ stories, there is little reason to believe these search engines will be viable referrers, or a comparable replacement for traditional search.

You can read the full study, “AI search has a citation problem,” at Columbia Journalism Review.

Great job to Andrew Deck and the team at Nieman Lab for sharing this story.