ProPublica is a nonprofit newsroom that investigates abuses of power.


Hours after Donald Trump was sworn in as president, users spread a false claim on Facebook that Immigration and Customs Enforcement was paying a bounty for reports of undocumented people.

“BREAKING — ICE is allegedly offering $750 per illegal immigrant that you turn in through their tip form,” read a post on a page called NO Filter Seeking Truth, adding, “Cash in folks.”

Check Your Fact, Reuters and other fact-checkers debunked the claim, and Facebook added labels to posts warning that they contained false information or missing context. ICE has a tip line but said it does not offer cash bounties.

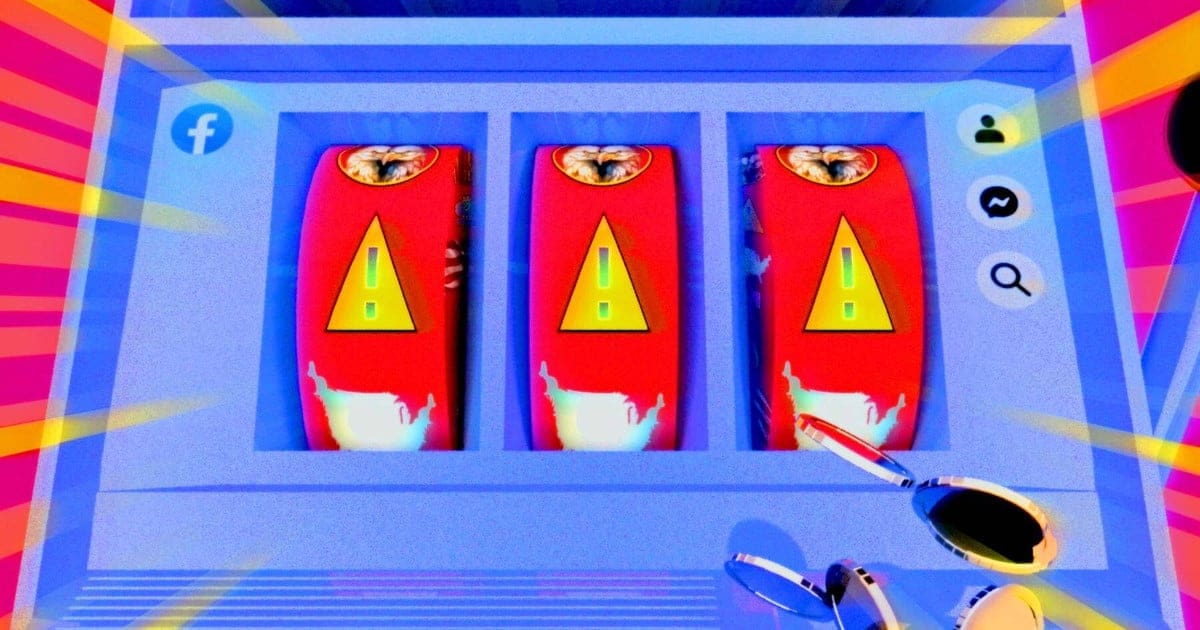

This spring, Meta plans to stop working with fact-checkers in the U.S. to label false or misleading content, the company said on Jan. 7. And if a post like the one about ICE goes viral, the pages that spread it could earn a cash bonus.

Meta CEO Mark Zuckerberg also said in January that the company was removing or dialing back automated systems that reduce the spread of false information. At the same time, Meta is revamping a program that has paid bonuses to creators for content based on views and engagement, potentially pouring accelerant on the kind of false posts it once policed. The new Facebook Content Monetization program is currently invite-only, but Meta plans to make it widely available this year.

The upshot: a likely resurgence of incendiary false stories on Facebook, some of them funded by Meta, according to former professional Facebook hoaxsters and a former Meta data scientist who worked on trust and safety.


ProPublica identified 95 Facebook pages that regularly post made-up headlines designed to draw engagement — and, often, stoke political divisions. The pages, most of which are managed by people overseas, have a total of more than 7.7 million followers.

After a review, Meta said it had removed 81 pages for being managed by fake accounts or misrepresenting themselves as American while posting about politics and social issues. Tracy Clayton, a Meta spokesperson, declined to respond to specific questions, including whether any of the pages were eligible for or enrolled in the company’s viral content payout program.

The pages collected by ProPublica offer a sample of those that could be poised to cash in.

Meta has made debunking viral hoaxes created for money a top priority for nearly a decade, with one executive calling this content the “worst of the worst.” Meta has a policy against paying for content its fact-checkers label as false, but that rule will become irrelevant when the company stops working with them. Already, 404 Media found that overseas spammers are earning payouts using deceptive AI-generated content, including images of emaciated people meant to stoke emotion and engagement. Such content is rarely fact-checked because it doesn’t make any verifiable claims.

With the removal of fact-checks in the U.S., “what is the protection now against viral hoaxes for profit?” said Jeff Allen, the chief research officer of the nonprofit Integrity Institute and a former Meta data scientist.

“The systems are designed to amplify the most salacious and inciting content,” he added.

In an exchange on Facebook Messenger, the manager of NO Filter Seeking Truth, which shared the false ICE post, told ProPublica that the page has been penalized so many times for sharing false information that Meta won’t allow it to earn money under the current rules. The page is run by a woman based in the southern U.S., who spoke on the condition of anonymity because she said she has received threats due to her posts. She said the news about the fact-checking system ending was “great information.”

Clayton said Meta’s community standards and content moderation teams are still active and reiterated the company’s Jan. 7 statement that it is working to ensure it doesn’t “over-enforce” its rules by mistakenly banning or suppressing content.

Meta’s changes mark a significant reversal of the company’s approach to moderating false and misleading information, reframing the labeling or downranking of content as a form of censorship. “It’s time to get back to our roots around free expression on Facebook and Instagram,” Zuckerberg said in his announcement. His stance reflects the approach of Elon Musk after acquiring Twitter, now X, in 2022. Musk has made drastic cuts to the company’s trust and safety team, reinstated thousands of suspended accounts, including that of a prominent neo-Nazi, and positioned Community Notes, which allows participating users to add context via notes appended to tweets, as the platform’s key system for flagging false and misleading content.

Zuckerberg has said Meta will replace fact-checkers and some automated systems in the U.S. with a version of the Community Notes system. A Jan. 7 update to a Meta policy page said that in the U.S. the company “may still reduce the distribution of certain hoax content whose spread creates a particularly bad user or product experience (e.g., certain commercial hoaxes).”

Clayton did not clarify whether posts with notes appended to them would be eligible for monetization. He provided links to academic papers that detail how crowdsourced fact-checking programs like Community Notes can be effective at identifying misinformation, building trust among users and addressing perceptions of bias.

A 2023 ProPublica investigation, as well as reporting from Bloomberg, found that X’s Community Notes failed to effectively address misinformation about the Israel-Hamas conflict. Reporting from the BBC and Agence France-Presse showed that X users who share false information have earned thousands of dollars thanks to X’s content monetization program, which also rewards high engagement.

Keith Coleman, X’s vice president of product, previously told ProPublica that the analysis of Community Notes about the Israel-Hamas conflict did not include all of the relevant notes, and he said that the program “is found helpful by people globally, across the political spectrum.”

Allen said it takes time, resources and oversight to scale up crowdsourced fact-checking systems. Meta’s decision to scrap fact-checking before giving the new approach time to prove itself is risky, he said.

“We could in theory have a Community Notes program that was as effective, if not more effective, than the fact-checking program,” he said. “But to turn all these things off before you have the Community Notes thing in place definitely feels like we’re explicitly going to have a moment with little guardrails.”

Before Facebook began cracking down on content in late 2016, American fake news peddlers and spammers based in North Macedonia and elsewhere cashed in on viral hoaxes that deepened political divisions and played on people’s fears.

One American, Jestin Coler, ran a network of sites that earned money from hoax news stories for nearly a decade, including the infamous and false viral headline from 2016 “FBI Agent Suspected In Hillary Email Leaks Found Dead In Apparent Murder-Suicide.” He previously told NPR that he started the sites as a way to “infiltrate the echo chambers of the alt-right.” Coler said he earned five figures a month from the sites, which he operated in his spare time.

When people clicked on the links to the stories in their news feed, they landed on websites full of ads, which generated revenue for Coler. That’s become a tougher business model since Meta has made story links less visible on Facebook in recent years.

Facebook’s new program to pay publishers directly for viral content could unlock a fresh revenue stream for hoaxsters. “It’s still the same formula to get people riled up. It seems like it could just go right back to those days, like overnight,” Coler told ProPublica in a phone interview. He said he left the Facebook hoax business years ago and won’t return.

In January, ProPublica compiled a list of pages that had been previously cited for posting hoaxes and false content and discovered dozens more through domain and content searches. The pages posted false headlines designed to spark controversy, such as “Lia Thomas Admits: ‘I Faked Being Trans to Expose How Gullible the Left Is’” and “Elon Musk announced that he has acquired MSNBC for $900 million to put an end to toxic programming.” The Musk headline was paired with an AI-generated image of him holding a contract with the MSNBC logo. It generated over 11,000 reactions, shares and comments.

Most of the pages are managed by accounts outside of the U.S., including in North Macedonia, Vietnam, the Philippines and Indonesia, according to data from Facebook. Many of these pages use AI-generated images to illustrate their made-up headlines.

One network of overseas-run pages is connected to the site SpaceXMania.com, an ad-funded site filled with hoax articles like “Elon Musk Confronts Beyoncé Publicly: ‘Stop Pretending to Be Country, It’s Just Not You.’” SpaceX Fanclub, the network’s largest Facebook page, has close to 220,000 followers and labels its content as satire. One of its recent posts was a typo-laden AI-generated image of a sign that said, “There Are Only 2 2 Genders And Will Ban Atheletes From Women Sports — President.”

SpaceXMania.com’s terms and conditions page says it’s owned by Funky Creations LTD, a United Kingdom company registered to Muhammad Shabayer Shaukat, a Pakistani national. ProPublica sent questions to the site and received an email response signed by Tim Lawson, who said he’s an American based in Florida who works with Shaukat. (ProPublica was unable to locate a person by that name in public records searches, based on the information he provided.)

“Our work involves analyzing the latest trends and high-profile news related to celebrities and shaping it in a way that appeals to a specific audience — particularly conservatives and far-right groups who are predisposed to believe certain narratives,” the email said.

Lawson said they earn between $500 and $1,500 per month from web ads and more than half of the traffic comes from people clicking on links on Facebook. The pages are not currently enrolled in the invitation-only Facebook Content Monetization program, according to Lawson.

The SpaceXMania pages identified by ProPublica were recently taken offline. Lawson denied that they were removed by Meta and said he deactivated the pages “due to some security reasons.” Meta declined to comment.

It remains to be seen how hoax page operators will fare as Meta’s algorithmic reversals take hold and the U.S. fact-checking program grinds to a halt. But some Facebook users are already taking advantage of the loosened guardrails.

Soon after Zuckerberg announced the changes, people spread a fake screenshot of a Bloomberg article headlined, “The spark from Zuckerberg’s electric penis pump, might be responsible for the LA fires.”

“Community note: verified true,” wrote one commenter.

Mollie Simon contributed research.

Story by Craig Silverman, ProPublica.